Image server: MinIO + Thumbor

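Our company has long used SeaweedFS for file storage and as an image server. SeaweedFS is easy to use and performs well, but it lacks a file-management solution, large-file storage, and Kubernetes support. To meet those needs I decided to replace our existing file storage with object storage (OSS). MinIO is an object storage server released under the Apache License v2.0. It is compatible with the Amazon S3 API and is well suited to storing large volumes of unstructured data such as images, videos, log files, backups, and container/VM images. A single object can be anywhere from a few KB up to a maximum of 5 TB.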

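MinIO does not process images itself. To build an image server it has to be paired with an image-processing service such as Thumbor. Thumbor is a small image-processing service that supports resizing, cropping, flipping, filters, and more.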

MINIO

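MinIO is very easy to install and use. It provides installation examples for all major platforms and SDK documentation for Java, JavaScript, Python, Go, and .NET. Its complete Chinese documentation is very friendly to someone like me whose English is weak. It is also written in Go, which I plan to learn, so I may study the source code when I have time.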

Installation

For Kubernetes, an official Helm chart is available. Configure values.yaml:

```yaml
image:
  repository: minio/minio
  tag: RELEASE.2018-10-18T00-28-58Z
  pullPolicy: IfNotPresent

mode: standalone

accessKey: "admin"
secretKey: "123456"

configPath: "/root/.minio/"
mountPath: "/export"

persistence:
  enabled: true

  ## A manually managed Persistent Volume and Claim
  ## Requires persistence.enabled: true
  ## If defined, PVC must be created manually before volume will be bound
  # existingClaim:

  ## minio data Persistent Volume Storage Class
  ## If defined, storageClassName: <storageClass>
  ## If set to "-", storageClassName: "", which disables dynamic provisioning
  ## If undefined (the default) or set to null, no storageClassName spec is
  ##   set, choosing the default provisioner. (gp2 on AWS, standard on
  ##   GKE, AWS & OpenStack)
  ##
  ## Storage class of PV to bind. By default it looks for standard storage class.
  ## If the PV uses a different storage class, specify that here.
  storageClass: minio
  accessMode: ReadWriteOnce
  size: 20Gi

  ## If subPath is set mount a sub folder of a volume instead of the root of the volume.
  ## This is especially handy for volume plugins that don't natively support sub mounting (like glusterfs).
  ##
  subPath: ""

ingress:
  enabled: true
  annotations: {}
    # kubernetes.io/ingress.class: nginx
    # kubernetes.io/tls-acme: "true"
  path: /
  hosts:
    - minio.domain.vc
  tls:
    - secretName: minio-oss-tls
      hosts:
        - minio.domain.vc

## Node labels for pod assignment
## Ref: https://kubernetes.io/docs/user-guide/node-selection/
##
nodeSelector: {}

resources:
  requests:
    memory: 256Mi
    cpu: 250m
```

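Run the install command:

```shell
helm install --name my-release -f values.yaml stable/minio
```

The official Helm chart repository may be blocked by the Great Firewall, so the install can fail. In that case, clone the chart source from GitHub and install from it: https://github.com/helm/charts/tree/master/stable/minio

For HTTPS on the Ingress, see https://jamesdeng.github.io/2018/09/17/K8s-cert-manager.html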

Usage

Example from the official site:

```java
import java.io.IOException;
import java.security.NoSuchAlgorithmException;
import java.security.InvalidKeyException;

import org.xmlpull.v1.XmlPullParserException;

import io.minio.MinioClient;
import io.minio.errors.MinioException;

// Uses the minio-java API of the time (2018); minio-java 7+ creates clients with MinioClient.builder()
public class FileUploader {
  public static void main(String[] args) throws NoSuchAlgorithmException, IOException, InvalidKeyException, XmlPullParserException {
    try {
      // Create a MinioClient from the MinIO server URL (with port), access key and secret key
      MinioClient minioClient = new MinioClient("https://play.minio.io:9000", "Q3AM3UQ867SPQQA43P2F", "zuf+tfteSlswRu7BJ86wekitnifILbZam1KYY3TG");

      // Check whether the bucket already exists
      boolean isExist = minioClient.bucketExists("asiatrip");
      if (isExist) {
        System.out.println("Bucket already exists.");
      } else {
        // Create a bucket named asiatrip to hold a zip file of photos
        minioClient.makeBucket("asiatrip");
      }

      // Upload a file to the bucket with putObject
      minioClient.putObject("asiatrip", "asiaphotos.zip", "/home/user/Photos/asiaphotos.zip");
      System.out.println("/home/user/Photos/asiaphotos.zip is successfully uploaded as asiaphotos.zip to `asiatrip` bucket.");
    } catch (MinioException e) {
      System.out.println("Error occurred: " + e);
    }
  }
}
```

Thumbor

Installation

None of the Thumbor Docker images on GitHub had the latest release, 6.6.0, so I forked one and modified it: https://github.com/jamesDeng/thumbor

Docker Compose example:

```yaml
version: '3'
services:
  thumbor:
    image: registry.cn-shenzhen.aliyuncs.com/thinker-open/thumbor:6.6.0
    environment:
      #- ALLOW_UNSAFE_URL=False
      - DETECTORS=['thumbor.detectors.feature_detector','thumbor.detectors.face_detector']
      - AWS_ACCESS_KEY_ID=admin # put your AWS_ACCESS_KEY_ID here
      - AWS_SECRET_ACCESS_KEY=123456 # put your AWS_SECRET_ACCESS_KEY here
      # Is needed for buckets that demand the new signing algorithm (v4)
      # - S3_USE_SIGV4=true
      # - TC_AWS_REGION=eu-central-1
      - TC_AWS_REGION=us-east-1
      - TC_AWS_ENDPOINT=https://minio.domain.vc
      - TC_AWS_ENABLE_HTTP_LOADER=False
      - TC_AWS_ALLOWED_BUCKETS=False
      # LOADER
      - LOADER=tc_aws.loaders.s3_loader
      - TC_AWS_LOADER_BUCKET=test-images
      # STORAGE
      - STORAGE=tc_aws.storages.s3_storage
      - TC_AWS_STORAGE_BUCKET=thumbor-storage
      - TC_AWS_STORAGE_ROOT_PATH=storage
      # RESULT_STORAGE
      - RESULT_STORAGE=tc_aws.result_storages.s3_storage
      - TC_AWS_RESULT_STORAGE_BUCKET=thumbor-storage
      - TC_AWS_RESULT_STORAGE_ROOT_PATH=result_storage
      - RESULT_STORAGE_STORES_UNSAFE=True
      - STORAGE_EXPIRATION_SECONDS=None
      - RESULT_STORAGE_EXPIRATION_SECONDS=None
    restart: always
    ports:
      - "8002:8000"
    volumes:
      # mounting a /data folder to store cached images
      - /Users/james/james-docker/thumbor/data:/data
    networks:
      - app
  nginx:
    image: registry.cn-shenzhen.aliyuncs.com/thinker-open/thumbor-nginx:1.0.1
    links:
      - thumbor:thumbor
    ports:
      - "8001:80" # nginx cache port (with failover to thumbor)
    hostname: nginx
    restart: always
    networks:
      - app
volumes:
  data:
    driver: local
networks:
  app:
    driver: bridge
```

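Start it:

```shell
docker-compose -f thumbor-prod.yaml up -d
```

In this configuration RESULT_STORAGE also uses tc_aws.result_storages.s3_storage. Most articles I have read set RESULT_STORAGE to thumbor.result_storages.file_storage and configure Nginx to read the results from local disk. In a clustered deployment, however, the processed result of any given image could end up stored on every instance, which wastes a lot of space. Storing results in MinIO keeps one shared copy.

The buckets in the example (TC_AWS_STORAGE_BUCKET=thumbor-storage and TC_AWS_RESULT_STORAGE_BUCKET=thumbor-storage) must be created in MinIO by hand. After installing mc, run:

```shell
mc config host add minio-oss https://minio.domain.vc admin 123456 S3v4
mc mb minio-oss/thumbor-storage
```

TC_AWS_LOADER_BUCKET must also be set. This Docker image has a problem and does not support specifying the bucket in the request URL, and the fixes I found online did not solve it.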

nginx notes

The nginx config improves on Thumbor's URL syntax. It drops the `unsafe` prefix and adds a custom suffix syntax for the size.

```nginx
server {
    listen 80 default;
    server_name localhost;

    # This CORS configuration will be deleted if envvar THUMBOR_ALLOW_CORS != true
    add_header 'Access-Control-Allow-Origin' '*'; # THUMBOR_ALLOW_CORS
    add_header 'Access-Control-Allow-Credentials' 'true'; # THUMBOR_ALLOW_CORS
    add_header 'Access-Control-Allow-Methods' 'GET, POST, PUT, DELETE, OPTIONS'; # THUMBOR_ALLOW_CORS
    add_header 'Access-Control-Allow-Headers' 'Accept,Authorization,Cache-Control,Content-Type,DNT,If-Modified-Since,Keep-Alive,Origin,User-Agent,X-Mx-ReqToken,X-Requested-With'; # THUMBOR_ALLOW_CORS

    # Thumbor syntax without "unsafe": /200x200/test1.jpg -> /unsafe/200x200/test1.jpg
    location / {
        proxy_pass http://thumbor/unsafe$request_uri;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }

    # Custom suffix syntax: /test1.jpg_200x200 -> /unsafe/200x200/test1.jpg
    location ~* ^([\s\S]+)_([\d-]+x[\d-]+)$ {
        proxy_pass http://thumbor;
        # rewrite takes a plain regex; "~*" is only a location modifier
        rewrite ^/([\s\S]+)_([\d-]+x[\d-]+)$ /unsafe/$2/$1 break;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }

    location = /healthcheck {
        proxy_pass http://thumbor$request_uri;
        access_log off;
    }

    location ~ /\.ht { deny all; access_log off; error_log off; }
    location ~ /\.hg { deny all; access_log off; error_log off; }
    location ~ /\.svn { deny all; access_log off; error_log off; }
    location = /favicon.ico { deny all; access_log off; error_log off; }
}
```

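Usage

For example, to crop the image test1.jpg in the bucket test-images to 200x200:

Official syntax:

http://localhost:8001/200x200/test1.jpg

Custom suffix syntax:

http://localhost:8001/test1.jpg_200x200

For the rest of Thumbor's URL syntax, see the official documentation.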
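You can check the suffix rule before deploying by running the same regex outside nginx. The class below is a minimal sketch that reuses the (corrected) rewrite regex to map a request path to the Thumbor path. The class name is hypothetical. It is case-sensitive, unlike the `~*` location, and it does not contact Thumbor.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Mirrors the nginx suffix rule /<path>_<W>x<H> -> /unsafe/<W>x<H>/<path>.
 * Sketch only: reuses the rewrite regex, is case-sensitive, and does not call Thumbor.
 */
final class ThumborSuffixRewrite {
    // Greedy path, then "_", then WxH where each side may hold digits and "-"
    // (Thumbor reads a leading "-" as a flip).
    private static final Pattern SUFFIX = Pattern.compile("^/([\\s\\S]+)_([\\d-]+x[\\d-]+)$");

    static Optional<String> rewrite(String uri) {
        Matcher m = SUFFIX.matcher(uri);
        if (!m.matches()) {
            return Optional.empty(); // handled by "location /" instead
        }
        return Optional.of("/unsafe/" + m.group(2) + "/" + m.group(1));
    }

    public static void main(String[] args) {
        String[] samples = {"/test1.jpg_200x200", "/photos/a_b.jpg_-300x0", "/200x200/test1.jpg"};
        for (String uri : samples) {
            System.out.println(uri + " -> " + rewrite(uri).orElse("(location /)"));
        }
    }
}
```

The greedy first group backtracks to the last `_` that is followed by a size. File names that contain underscores, such as `a_b.jpg`, therefore still resolve correctly.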

Installing on Kubernetes

I wrote a Helm chart, https://github.com/jamesDeng/helm-charts/tree/master/thumbor. Configure values.yaml:

```yaml
# Default values for thumbor.
# This is a YAML-formatted file.
# Declare variables to be passed into your templates.

replicaCount: 1

thumbor:
  image:
    repository: registry.cn-shenzhen.aliyuncs.com/thinker-open/thumbor
    tag: 6.6.0
    pullPolicy: Always
  resources:
    limits:
      cpu: 1000m
      memory: 1024Mi
    requests:
      cpu: 500m
      memory: 128Mi

nginx:
  image:
    repository: registry.cn-shenzhen.aliyuncs.com/thinker-open/thumbor-nginx
    tag: 1.0.1
    pullPolicy: Always
  resources:
    limits:
      cpu: 500m
      memory: 256Mi
    requests:
      cpu: 100m
      memory: 128Mi

service:
  type: ClusterIP
  port: 80

ingress:
  enabled: true
  annotations: {}
    # kubernetes.io/ingress.class: nginx
    # kubernetes.io/tls-acme: "true"
  path: /
  hosts:
    - image.domain.vc
  tls: []
  #  - secretName: chart-example-tls
  #    hosts:
  #      - chart-example.local

nodeSelector: {}

thumborConfig:
  THUMBOR_PORT: 8000
  ALLOW_UNSAFE_URL: "True"
  LOG_LEVEL: "DEBUG"
  DETECTORS: "['thumbor.detectors.feature_detector','thumbor.detectors.face_detector']"
  AWS_ACCESS_KEY_ID: admin
  AWS_SECRET_ACCESS_KEY: 123456
  # aws:eu-central-1 minio:us-east-1
  TC_AWS_REGION: us-east-1
  TC_AWS_ENDPOINT: https://minio.domain.vc
  TC_AWS_ENABLE_HTTP_LOADER: "False"
  LOADER: "tc_aws.loaders.s3_loader"
  TC_AWS_LOADER_BUCKET: test-images
  TC_AWS_LOADER_ROOT_PATH: ""
  STORAGE: "tc_aws.storages.s3_storage"
  TC_AWS_STORAGE_BUCKET: thumbor-storage
  TC_AWS_STORAGE_ROOT_PATH: storage
  RESULT_STORAGE: "tc_aws.result_storages.s3_storage"
  TC_AWS_RESULT_STORAGE_BUCKET: thumbor-storage
  TC_AWS_RESULT_STORAGE_ROOT_PATH: result_storage
  RESULT_STORAGE_STORES_UNSAFE: "True"
  STORAGE_EXPIRATION_SECONDS: None
  RESULT_STORAGE_EXPIRATION_SECONDS: None
```

Clone the repository, change into the thumbor directory, and run:

```shell
helm install --name thumbor -f values.yaml .
```

Once the deployment succeeds, images are served at http://image.domain.vc/200x200/test1.jpg


K8s cert manager

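cert-manager is a native Kubernetes certificate-management controller. It can automatically issue and renew HTTPS certificates from Let's Encrypt and HashiCorp Vault.

Installation

Install it with Helm. The chart is available in the charts repository:

```shell
helm install \
  --name cert-manager \
  --namespace kube-system \
  stable/cert-manager
```

Because of the firewall, it is better to download the chart source and install from it:

```shell
git clone https://github.com/helm/charts.git
cd charts/stable/cert-manager
helm install \
  --name cert-manager \
  --namespace kube-system \
  ./
```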

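Usage

Configure an Issuer

First add an Issuer, which defines where certificates are obtained from. cert-manager provides two kinds. An Issuer is used within a single namespace, and a ClusterIssuer can be used across the whole cluster.

```shell
cat>issuer.yaml<<EOF
apiVersion: certmanager.k8s.io/v1alpha1
# Issuer/ClusterIssuer
kind: Issuer
metadata:
  name: cert-issuer
  namespace: default
spec:
  acme:
    # The ACME server URL
    server: https://acme-v02.api.letsencrypt.org/directory
    # Email address used for ACME registration
    email: my@163.com
    # Secret that stores the private key registered with ACME
    privateKeySecretRef:
      name: cert-issuer-key
    # Enable the http-01 challenge
    http01: {}
EOF

kubectl apply -f issuer.yaml
```

To define a ClusterIssuer instead, set kind in the YAML to ClusterIssuer. A ClusterIssuer is cluster-scoped, so metadata.namespace is not needed.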

Add a secret

Generate a signing key and create a Kubernetes secret:

```shell
# Generate a CA private key
$ openssl genrsa -out ca.key 2048

# Create a self signed Certificate, valid for 10yrs with the 'signing' option set
$ openssl req -x509 -new -nodes -key ca.key -subj "/CN=kube-ingress" -days 3650 -reqexts v3_req -extensions v3_ca -out ca.crt

$ kubectl create secret tls my-tls \
    --cert=ca.crt \
    --key=ca.key \
    --namespace=default
```

Obtain a Certificate

```shell
cat>my-cert.yaml<<EOF
apiVersion: certmanager.k8s.io/v1alpha1
kind: Certificate
metadata:
  name: my-cert
  namespace: default
spec:
  secretName: my-tls
  issuerRef:
    name: cert-issuer
    ## When using a ClusterIssuer, add kind: ClusterIssuer
    #kind: ClusterIssuer
  commonName: ""
  dnsNames:
  - www.example.com
  acme:
    config:
    - http01:
        ingress: ""
      domains:
      - www.example.com
EOF

# Create it
kubectl apply -f my-cert.yaml

# Check progress
kubectl describe certificate my-cert -n default
```


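GitLab CI/CD

In a DevOps workflow, CI/CD is a very important step, because it is what makes rapid iteration possible:

- CI (continuous integration): after code is committed, automatically run unit tests, compile the code, build images, push images, and so on.

- CD (continuous delivery): deploy the built artifacts to the various environments, such as staging and production.

GitLab CI/CD reads its jobs from the .gitlab-ci.yml file in the project and processes them accordingly. The builds are carried out by an agent called GitLab Runner. The structure is as follows:

GitLab Runner

GitLab CI/CD jobs are run by a GitLab Runner. A Runner can be a virtual machine, a VPS, a bare-metal machine, a Docker container, or even a cluster of containers. GitLab and its Runners communicate through an API, so the only requirement is that the machine running the Runner has network access to GitLab. Install it following the official documentation: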

```shell
# The machine runs CentOS 7.4
curl -L https://packages.gitlab.com/install/repositories/runner/gitlab-runner/script.rpm.sh | sudo bash
yum install gitlab-runner
gitlab-runner status
```

A Runner is attached to a project, a group, or the whole GitLab instance by registering it. Find the registration token under Project/Group -> CI/CD -> Runners settings.

Then follow the setup steps from the official documentation:

```shell
$ gitlab-runner register
Running in system-mode.

Please enter the gitlab-ci coordinator URL (e.g. https://gitlab.com/):
# enter the URL, e.g. http://gitlab.domain.com/
Please enter the gitlab-ci token for this runner:
# enter the registration token
Please enter the gitlab-ci description for this runner:
# enter a description
Please enter the gitlab-ci tags for this runner (comma separated):
# enter tags
Registering runner... succeeded                     runner=9jHBE4P6
Please enter the executor: ssh, virtualbox, docker+machine, docker-ssh+machine, docker, docker-ssh, parallels, shell, kubernetes:
# enter the executor type; I entered docker
Please enter the default Docker image (e.g. ruby:2.1):
# the image to run by default
Runner registered successfully. Feel free to start it, but if it's running already the config should be automatically reloaded!

# Check the registration
$ gitlab-runner list
```

Then check under Project/Group -> CI/CD -> Runners settings that the Runner is available.

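.gitlab-ci.yml

.gitlab-ci.yml, located in the project root, configures what CI does for the project.

For the syntax, see the translation of the official documentation.

Our projects currently include Java, React, Android, and iOS projects. This article covers Java.

Java build

The project is built with Maven and released as a Docker image. dockerfile-maven-plugin builds and pushes the Docker image.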

```yaml
image: maven:3.5.4-jdk-8

# Note: using docker:dind requires privileged = true in /etc/gitlab-runner/config.toml
services:
  - docker:dind

variables:
  # This will suppress any download for dependencies and plugins or upload messages which would clutter the console log.
  # `showDateTime` will show the passed time in milliseconds. You need to specify `--batch-mode` to make this work.
  MAVEN_OPTS: "-Dmaven.repo.local=.m2/repository -Dorg.slf4j.simpleLogger.log.org.apache.maven.cli.transfer.Slf4jMavenTransferListener=WARN -Dorg.slf4j.simpleLogger.showDateTime=true -Djava.awt.headless=true"
  # As of Maven 3.3.0 instead of this you may define these options in `.mvn/maven.config` so the same config is used
  # when running from the command line.
  # `installAtEnd` and `deployAtEnd` are only effective with recent version of the corresponding plugins.
  MAVEN_CLI_OPTS: "--batch-mode --errors --fail-at-end --show-version -DinstallAtEnd=true -DdeployAtEnd=true"
  # Needed for docker build
  DOCKER_HOST: tcp://docker:2375
  DOCKER_DRIVER: overlay2

# Cache downloaded dependencies and plugins between builds.
# To keep cache across branches add 'key: "$CI_JOB_NAME"'
cache:
  paths:
    - .m2/repository

stages:
  - build
  - deploy

# Validate JDK8
validate:jdk8:
  script:
    - 'mvn $MAVEN_CLI_OPTS test-compile'
  only:
    - dev-4.0.1
  stage: build

# For now this only goes as far as packaging
deploy:jdk8:
  stage: deploy
  script:
    - 'mvn $MAVEN_CLI_OPTS deploy'
  only:
    - dev-4.0.1
```

Note that docker:dind requires the container to run with --privileged. For a Runner, this is set with privileged = true in /etc/gitlab-runner/config.toml:

```toml
concurrent = 1
check_interval = 0

[[runners]]
  name = "kms"
  url = "http://example.org/ci"
  token = "3234234234234"
  executor = "docker"
  [runners.docker]
    tls_verify = false
    image = "alpine:3.4"
    privileged = true
    disable_cache = false
    volumes = ["/cache"]
  [runners.cache]
    Insecure = false
```

Push support in dockerfile-maven-plugin

Pushing with dockerfile-maven-plugin requires the registry username and password to be set in Maven's settings.xml, so the Maven image has to be rebuilt. For security, the password in settings.xml should also be encrypted. Encrypted passwords are only supported by dockerfile-maven-plugin versions above 1.4.3.

Prepare settings-security.xml and settings.xml:

```shell
# Generate the master password
$ mvn --encrypt-master-password 123456
{40hwd7X9T1wHfRTKWlUIbHyjacbV4iV/hSfODlRcH/E=}

$ cat>settings-security.xml<<EOF
<settingsSecurity>
  <master>{40hwd7X9T1wHfRTKWlUIbHyjacbV4iV/hSfODlRcH/E=}</master>
</settingsSecurity>
EOF

# Encrypt the docker registry password
# (mvn reads the master password from ~/.m2/settings-security.xml, so place the file there first)
$ mvn --encrypt-password 123456
{QdP5NFvxYhYHILE6k8tDEff+CZzWq2N3mCkPPUcljSA=}
```

Update the docker registry password in settings.xml:

```xml
<server>
  <id>registry.cn-shenzhen.aliyuncs.com</id>
  <username>username</username>
  <password>{QdP5NFvxYhYHILE6k8tDEff+CZzWq2N3mCkPPUcljSA=}</password>
</server>
```

The Dockerfile:

```dockerfile
FROM maven:3.5.4-jdk-8
COPY settings.xml /root/.m2/
COPY settings-security.xml /root/.m2/
```

Build your own Maven image with docker build, and change the image used in .gitlab-ci.yml to it.

Private Docker image support

If the Docker image requires a login, first generate the base64 value of username:password:

```shell
echo -n "my_username:my_password" | base64

# Example output to copy
bXlfdXNlcm5hbWU6bXlfcGFzc3dvcmQ=
```

Under Project/Group -> CI/CD -> Variables, add a variable named DOCKER_AUTH_CONFIG with the following content:

```json
{
  "auths": {
    "registry.example.com": {
      "auth": "bXlfdXNlcm5hbWU6bXlfcGFzc3dvcmQ="
    }
  }
}
```
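The `auth` field is just the base64 encoding of `username:password`. The `-n` in the shell command matters, because without it a trailing newline would be encoded too. The minimal Java sketch below builds the same variable value, which can help when credentials come from somewhere other than a terminal. The class name is hypothetical, and the registry and credentials are the placeholders from the text.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

/**
 * Builds the DOCKER_AUTH_CONFIG value for one registry. Registry and credentials are
 * placeholders; no JSON escaping is done, so keep them free of quotes and backslashes.
 */
final class DockerAuthConfig {
    /** Same as: echo -n "user:password" | base64 */
    static String auth(String username, String password) {
        byte[] raw = (username + ":" + password).getBytes(StandardCharsets.UTF_8);
        return Base64.getEncoder().encodeToString(raw);
    }

    static String json(String registry, String username, String password) {
        return "{\"auths\":{\"" + registry + "\":{\"auth\":\"" + auth(username, password) + "\"}}}";
    }

    public static void main(String[] args) {
        System.out.println(auth("my_username", "my_password")); // bXlfdXNlcm5hbWU6bXlfcGFzc3dvcmQ=
        System.out.println(json("registry.example.com", "my_username", "my_password"));
    }
}
```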

k8s helm deploy

Our projects are released to Kubernetes with Helm. This section shows how to do CD with Helm.

First, build an image containing kubectl and helm:

```dockerfile
FROM alpine:3.4

# Pay attention to the kubectl and helm versions
ENV KUBE_LATEST_VERSION=v1.10.7
ENV HELM_VERSION=v2.9.1
ENV HELM_FILENAME=helm-${HELM_VERSION}-linux-amd64.tar.gz

RUN apk add --update ca-certificates \
 && apk add --update -t deps curl \
 && apk add --update gettext tar gzip \
 && curl -L https://storage.googleapis.com/kubernetes-release/release/${KUBE_LATEST_VERSION}/bin/linux/amd64/kubectl -o /usr/local/bin/kubectl \
 && curl -L https://storage.googleapis.com/kubernetes-helm/${HELM_FILENAME} | tar xz && mv linux-amd64/helm /bin/helm && rm -rf linux-amd64 \
 && chmod +x /usr/local/bin/kubectl \
 && apk del --purge deps \
 && rm /var/cache/apk/*
```

The build needs a proxy to get past the firewall:

```shell
# HTTP_PROXY=http://192.168.1.22:1087 is a VPN proxy
docker build --build-arg HTTP_PROXY=http://192.168.1.22:1087 -t helm-kubectl:v2.9.1-1.10.7 .
```

kubectl needs the Kubernetes API config. On a Kubernetes master, convert the config to base64 and create a GitLab CI/CD variable named "kube_config" whose value is that base64 string:

```shell
cat ~/.kube/config | base64
```

Add a deploy-test job that releases to the test environment. In our current development workflow the test environment does not bump the version number. To force a redeploy anyway, add an update/version annotation under spec/template/metadata/annotations in deploy.yaml and drive it from a value in the chart's values:

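```yaml
spec:
  selector:
    matchLabels:
      app: admin
  replicas: 1
  revisionHistoryLimit: 3
  template:
    metadata:
      labels:
        app: admin
      annotations:
        # rendered from values so that --set admin.updateVersion=... changes the pod template
        update/version: {{ .Values.admin.updateVersion | default "" | quote }}
```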

In the job, `helm upgrade --set admin.updateVersion=<timestamp>` changes this annotation. Because the pod template changes, Kubernetes rolls out new pods, which redeploys the test environment:

```yaml
deploy-test:
  stage: deploy-test
  image: registry.cn-shenzhen.aliyuncs.com/thinker-open/helm-kubectl:v2.9.1-1.10.7
  before_script:
    - mkdir -p /etc/deploy
    # KUBECONFIG is defined in the global variables (KUBECONFIG: /etc/deploy/config)
    - echo ${kube_config} | base64 -d > ${KUBECONFIG}
    - kubectl config use-context kubernetes-admin@kubernetes
    - helm init --client-only --stable-repo-url https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
    - helm repo add my-repo http://helm.domain.com/
    - helm repo update
  script:
    # the chart is referenced as <repo-name>/<chart>; the repo name must be one added with helm repo add
    - helm upgrade cabbage-test --reuse-values --set admin.updateVersion=`date +%s` thinker/cabbage
  environment:
    name: test
    url: https://test.example.com
  only:
    - dev-4.0.1
```

Add a deploy-prod job that releases to production. It runs when a tag is pushed and uses the tag name as the image version:

```yaml
deploy-prod:
  stage: deploy-prod
  image: registry.cn-shenzhen.aliyuncs.com/thinker-open/helm-kubectl:v2.9.1-1.10.7
  before_script:
    - mkdir -p /etc/deploy
    # Use the kube_config variable; kubectl finds this file through KUBECONFIG=/etc/deploy/config
    - echo ${kube_config} | base64 -d > /etc/deploy/config
    # kubernetesContextName is the context name in the ~/.kube/config file
    - kubectl config use-context kubernetesContextName
    # Use the Alibaba Cloud mirror because of the firewall
    - helm init --client-only --stable-repo-url https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
    # Add the Helm repos you need
    - helm repo add my-repo http://helm.domain.com/
    - helm repo update
  script:
    # Only upgrade here; CI_COMMIT_REF_NAME is the branch or tag name
    - helm upgrade cabbage-test --reuse-values --set admin.image.tag=${CI_COMMIT_REF_NAME} thinker/cabbage
  environment:
    name: test
    url: https://prod.example.com
  only:
    - tags
```

Complete example

```yaml
image: maven:3.5.4-jdk-8

services:
  - docker:dind

variables:
  MAVEN_OPTS: "-Dmaven.repo.local=.m2/repository -Dorg.slf4j.simpleLogger.log.org.apache.maven.cli.transfer.Slf4jMavenTransferListener=WARN -Dorg.slf4j.simpleLogger.showDateTime=true -Djava.awt.headless=true"
  MAVEN_CLI_OPTS: "--batch-mode --errors --fail-at-end --show-version -DinstallAtEnd=true -DdeployAtEnd=true"
  DOCKER_HOST: tcp://docker:2375
  DOCKER_DRIVER: overlay2
  KUBECONFIG: /etc/deploy/config

# Cache downloaded dependencies and plugins between builds.
# To keep cache across branches add 'key: "$CI_JOB_NAME"'
cache:
  paths:
    - .m2/repository

stages:
  - build
  - deploy-test
  - deploy-prod

# mvn deploy builds the image and pushes it to Alibaba Cloud
build:jdk8:
  script:
    - 'mvn $MAVEN_CLI_OPTS deploy'
  only:
    - dev-4.0.1
  stage: build

deploy-test:
  stage: deploy-test
  image: registry.cn-shenzhen.aliyuncs.com/thinker-open/helm-kubectl:v2.9.1-1.10.7
  before_script:
    - mkdir -p /etc/deploy
    - echo ${kube_config} | base64 -d > ${KUBECONFIG}
    - kubectl config use-context kubernetes-admin@kubernetes
    - helm init --client-only --stable-repo-url https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
    - helm repo add my-repo http://helm.domain.com/
    - helm repo update
  script:
    - helm upgrade cabbage-test --reuse-values --set admin.updateVersion=`date +%s` thinker/cabbage
  environment:
    name: test
    url: https://test.example.com
  only:
    - dev-4.0.1

deploy-prod:
  stage: deploy-prod
  image: registry.cn-shenzhen.aliyuncs.com/thinker-open/helm-kubectl:v2.9.1-1.10.7
  before_script:
    - mkdir -p /etc/deploy
    - echo ${kube_config} | base64 -d > ${KUBECONFIG}
    - kubectl config use-context kubernetes-admin@kubernetes
    - helm init --client-only --stable-repo-url https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
    - helm repo add my-repo http://helm.domain.com/
    - helm repo update
  script:
    # CI_COMMIT_REF_NAME is the branch or tag name
    - helm upgrade cabbage-test --reuse-values --set admin.image.tag=${CI_COMMIT_REF_NAME} thinker/cabbage
  environment:
    name: test
    url: https://prod.example.com
  only:
    - tags
```
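To see which jobs a given push triggers, the `only:` lists above can be modeled in a few lines. The Java below is a simplified sketch, not GitLab's matcher. It handles only literal ref names and the `tags` keyword, ignoring regexes, `branches`, and variables. The class name and the tag `v4.0.1` are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/**
 * Simplified model of the `only:` lists in the complete example. Only two entry kinds
 * are handled: a literal ref name (e.g. dev-4.0.1) and the keyword "tags".
 */
final class OnlyRules {
    static boolean matches(List<String> only, String ref, boolean isTag) {
        for (String entry : only) {
            if (entry.equals("tags") ? isTag : entry.equals(ref)) {
                return true;
            }
        }
        return false;
    }

    static List<String> jobsFor(Map<String, List<String>> jobs, String ref, boolean isTag) {
        List<String> selected = new ArrayList<>();
        for (Map.Entry<String, List<String>> job : jobs.entrySet()) {
            if (matches(job.getValue(), ref, isTag)) {
                selected.add(job.getKey());
            }
        }
        return selected;
    }

    /** The jobs and their `only:` lists from the complete .gitlab-ci.yml example. */
    static Map<String, List<String>> pipeline() {
        Map<String, List<String>> jobs = new LinkedHashMap<>(); // keeps file order
        jobs.put("build:jdk8", Arrays.asList("dev-4.0.1"));
        jobs.put("deploy-test", Arrays.asList("dev-4.0.1"));
        jobs.put("deploy-prod", Arrays.asList("tags"));
        return jobs;
    }

    public static void main(String[] args) {
        System.out.println(jobsFor(pipeline(), "dev-4.0.1", false)); // [build:jdk8, deploy-test]
        System.out.println(jobsFor(pipeline(), "v4.0.1", true));     // [deploy-prod]
        System.out.println(jobsFor(pipeline(), "master", false));    // []
    }
}
```

Under these rules, a tag pipeline runs only deploy-prod, and build:jdk8 does not run for tags. The image referenced by `admin.image.tag=${CI_COMMIT_REF_NAME}` therefore has to be in the registry already when the tag is pushed.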

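Summary

Using Helm leaves a few open questions:

- The first install of a project should be done centrally by operations. If it were done in a job, how would the configuration files be handled?

- How should configuration items that are added or changed during an iteration be handled?

- How should writing the Helm chart be coordinated with project development?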


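K8s log processing

Logging solution for a Kubernetes cluster

Kubernetes provides no native storage for log data, so an existing logging solution has to be integrated into the cluster. First, here is the logging architecture described in the official documentation:

Node-level logging

Anything a containerized application writes to stdout and stderr is captured by the container engine and redirected somewhere. For example, the Docker container engine redirects these two streams to a logging driver, which Kubernetes configures to write to files in JSON format.

Cluster-level logging architectures

The official documentation recommends the following options:

- Use a node-level logging agent that runs on every node.

- Include a dedicated sidecar container for logging in the application pod.

- Push logs directly to a backend from within the application.

Using a node-level logging agent

You can implement cluster-level logging by running a node-level logging agent on each node. A logging agent is a dedicated tool that exposes logs or pushes them to a backend. Typically, the logging agent is a container that has access to the log directories of all application containers on that node.

Because the logging agent must run on every node, it is usually implemented as a DaemonSet replica, a manifest pod, or a dedicated native process on the node. The latter two approaches are deprecated and not recommended.

Using a sidecar container with a logging agent

You can use a sidecar container in one of the following ways:

- The sidecar container streams application logs to its own stdout.

- The sidecar container runs a logging agent that is configured to pick up logs from the application container.

Exposing logs directly from the application

You can implement cluster-level logging by exposing or pushing logs directly from every application. However, the implementation of such a logging mechanism is outside the scope of Kubernetes.

In practice

For the size of our cluster, a node-level logging agent is enough. We started with the officially recommended EFK (Elasticsearch + Fluentd + Kibana) stack. After trying Fluentd I found it not particularly pleasant to use, because its documentation, learning resources, and community activity were all lacking. In the end I replaced Fluentd with Filebeat from the Elastic family.

The current stack:

- Filebeat

- Elasticsearch

- Kibana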

Installation

Elasticsearch:

```shell
wget https://raw.githubusercontent.com/kubernetes/kubernetes/release-1.10/cluster/addons/fluentd-elasticsearch/es-service.yaml
kubectl apply -f es-service.yaml
wget https://raw.githubusercontent.com/kubernetes/kubernetes/release-1.10/cluster/addons/fluentd-elasticsearch/es-statefulset.yaml
```

In es-statefulset.yaml, switch the image to a registry mirror in China, and change data storage from emptyDir to a cloud disk:

```yaml
# Elasticsearch deployment itself
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: elasticsearch-logging
  namespace: kube-system
  labels:
    k8s-app: elasticsearch-logging
    version: v5.6.4
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  serviceName: elasticsearch-logging
  replicas: 2
  selector:
    matchLabels:
      k8s-app: elasticsearch-logging
      version: v5.6.4
  template:
    metadata:
      labels:
        k8s-app: elasticsearch-logging
        version: v5.6.4
        kubernetes.io/cluster-service: "true"
    spec:
      serviceAccountName: elasticsearch-logging
      containers:
      - image: registry.cn-hangzhou.aliyuncs.com/google_containers/elasticsearch:v5.6.4 # Alibaba Cloud mirror in China
        name: elasticsearch-logging
        resources:
          # need more cpu upon initialization, therefore burstable class
          limits:
            cpu: 1000m
          requests:
            cpu: 100m
        ports:
        - containerPort: 9200
          name: db
          protocol: TCP
        - containerPort: 9300
          name: transport
          protocol: TCP
        volumeMounts:
        - name: elasticsearch-logging
          mountPath: /data
        env:
        - name: "NAMESPACE"
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
      initContainers:
      - image: alpine:3.6
        command: ["/sbin/sysctl", "-w", "vm.max_map_count=262144"]
        name: elasticsearch-logging-init
        securityContext:
          privileged: true
  volumeClaimTemplates: # Alibaba Cloud disk storage
  - metadata:
      name: "elasticsearch-logging"
    spec:
      accessModes:
      - ReadWriteOnce
      storageClassName: alicloud-disk-efficiency
      resources:
        requests:
          storage: 20Gi
```

```shell
kubectl apply -f es-statefulset.yaml
```

Filebeat:

```shell
wget https://raw.githubusercontent.com/elastic/beats/6.4/deploy/kubernetes/filebeat-kubernetes.yaml
```

The Elasticsearch installed above has no authentication, so remove the Elasticsearch username and password settings from filebeat-kubernetes.yaml.

ConfigMap:

```yaml
output.elasticsearch:
  hosts: ['${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}']
  username: ${ELASTICSEARCH_USERNAME} # delete
  password: ${ELASTICSEARCH_PASSWORD} # delete
```

DaemonSet:

```yaml
env:
- name: ELASTICSEARCH_HOST
  # es-service.yaml creates a Service named elasticsearch-logging in kube-system
  value: elasticsearch-logging
- name: ELASTICSEARCH_PORT
  value: "9200"
- name: ELASTICSEARCH_USERNAME # delete
  value: elastic
- name: ELASTICSEARCH_PASSWORD # delete
  value: changeme
- name: ELASTIC_CLOUD_ID
  value:
- name: ELASTIC_CLOUD_AUTH
  value:
```

```shell
kubectl apply -f filebeat-kubernetes.yaml
```

Kibana:

```shell
wget https://raw.githubusercontent.com/kubernetes/kubernetes/release-1.10/cluster/addons/fluentd-elasticsearch/kibana-service.yaml
kubectl apply -f kibana-service.yaml

# Add an Ingress for Kibana
cat>kibana-ingress.yaml<<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kibana-logging
  namespace: kube-system
spec:
  rules:
  - host: kibana.domain.com
    http:
      paths:
      - backend:
          serviceName: kibana-logging
          servicePort: 5601
EOF
kubectl apply -f kibana-ingress.yaml

wget https://raw.githubusercontent.com/kubernetes/kubernetes/release-1.10/cluster/addons/fluentd-elasticsearch/kibana-deployment.yaml
```

In kibana-deployment.yaml, set SERVER_BASEPATH to "". The original value is the path used when Kibana is reached through the API server proxy. Unless you set it to "" or remove it, Kibana cannot be reached through the domain name.

```yaml
- name: SERVER_BASEPATH
  value: /api/v1/namespaces/kube-system/services/kibana-logging/proxy # change to ""
```

```shell
kubectl apply -f kibana-deployment.yaml
```

Usage

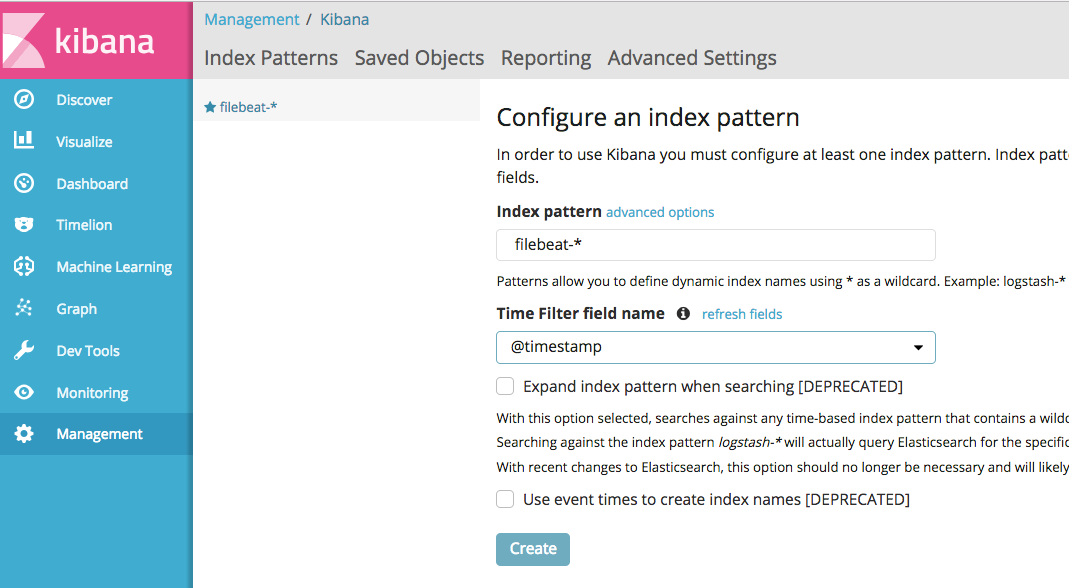

Once everything is installed, open kibana.domain.com and add the index pattern filebeat-*.
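The `filebeat-*` pattern works because Filebeat writes to one index per day. The sketch assumes the Filebeat 6.x default index setting, `filebeat-%{[beat.version]}-%{+yyyy.MM.dd}`, is left unchanged in filebeat-kubernetes.yaml. The Java below reproduces that naming for a given version and UTC date, and checks it against a trailing-`*` pattern the way the Kibana index pattern is used here. The class name and the version string are illustrative.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

/**
 * Sketch of Filebeat 6.x daily index names, assuming the default
 * "filebeat-%{[beat.version]}-%{+yyyy.MM.dd}" (date taken from the event's UTC timestamp).
 */
final class FilebeatIndex {
    private static final DateTimeFormatter DAY = DateTimeFormatter.ofPattern("yyyy.MM.dd");

    static String indexName(String beatVersion, LocalDate utcDate) {
        return "filebeat-" + beatVersion + "-" + utcDate.format(DAY);
    }

    /** Minimal pattern check supporting a single trailing "*". */
    static boolean matchesPattern(String pattern, String index) {
        if (pattern.endsWith("*")) {
            return index.startsWith(pattern.substring(0, pattern.length() - 1));
        }
        return index.equals(pattern);
    }

    public static void main(String[] args) {
        String index = indexName("6.4.2", LocalDate.of(2018, 10, 20));
        System.out.println(index);                               // filebeat-6.4.2-2018.10.20
        System.out.println(matchesPattern("filebeat-*", index)); // true
    }
}
```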

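Access control

At this point Kibana is wide open, which is clearly unacceptable in production. I tried to secure it with Elastic's X-Pack without success. The main obstacle was that the official Elasticsearch manifests do not support X-Pack, and I had not worked with Elasticsearch for a long time. In the end I protected it by adding basic-auth to the Ingress.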

Install htpasswd and create a user and password:

```shell
# Install
yum -y install httpd-tools

# Create the password file
htpasswd -c auth kibana
New password:
Re-type new password:
Adding password for user kibana
```
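If httpd-tools is not available, a line for the same `auth` file can be generated in code. The Java sketch below uses the salted SHA-1 `{SSHA}` scheme, which nginx documents as supported for auth_basic_user_file. It assumes the nginx inside the ingress controller accepts that scheme. It does not reproduce htpasswd's default apr1 (MD5) format. The class name is hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.security.SecureRandom;
import java.util.Arrays;
import java.util.Base64;

/**
 * Generates "user:{SSHA}base64(SHA1(password + salt) + salt)" lines.
 * Assumption: the ingress controller's nginx accepts the {SSHA} scheme.
 */
final class SshaHtpasswd {
    private static final String SCHEME = "{SSHA}";
    private static final int SHA1_LENGTH = 20;

    private static byte[] sha1(byte[] password, byte[] salt) {
        try {
            MessageDigest md = MessageDigest.getInstance("SHA-1");
            md.update(password);
            md.update(salt);
            return md.digest();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-1 is required on every Java platform", e);
        }
    }

    static String entry(String user, String password) {
        byte[] salt = new byte[8];
        new SecureRandom().nextBytes(salt);
        byte[] digest = sha1(password.getBytes(StandardCharsets.UTF_8), salt);
        byte[] payload = Arrays.copyOf(digest, digest.length + salt.length); // digest, then salt
        System.arraycopy(salt, 0, payload, digest.length, salt.length);
        return user + ":" + SCHEME + Base64.getEncoder().encodeToString(payload);
    }

    /** Checks a password against a line produced by entry(). */
    static boolean verify(String line, String password) {
        int at = line.indexOf(SCHEME);
        if (at < 0) {
            return false; // not an {SSHA} line
        }
        byte[] payload = Base64.getDecoder().decode(line.substring(at + SCHEME.length()));
        if (payload.length <= SHA1_LENGTH) {
            return false;
        }
        byte[] digest = Arrays.copyOfRange(payload, 0, SHA1_LENGTH);
        byte[] salt = Arrays.copyOfRange(payload, SHA1_LENGTH, payload.length);
        return MessageDigest.isEqual(digest, sha1(password.getBytes(StandardCharsets.UTF_8), salt));
    }

    public static void main(String[] args) {
        String line = entry("kibana", args.length > 0 ? args[0] : "change-me");
        System.out.println(line); // append this line to the "auth" file
    }
}
```

The salt is random, so each run prints a different line for the same password. The `verify` method is there to confirm that a line round-trips.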

Create a secret to store the password, in the same namespace as the Ingress (kube-system here):

```shell
kubectl -n <namespace> create secret generic kibana-basic-auth --from-file=auth
```

Update the Kibana Ingress:

```shell
cat>kibana-ingress.yaml<<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kibana-logging
  namespace: kube-system
  annotations:
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: kibana-basic-auth
    nginx.ingress.kubernetes.io/auth-realm: "Authentication Required - kibana"
spec:
  rules:
  - host: kibana.domain.com
    http:
      paths:
      - backend:
          serviceName: kibana-logging
          servicePort: 5601
EOF

kubectl apply -f kibana-ingress.yaml
```

Now kibana.domain.com asks for a username and password.


K8s monitoring: Prometheus Operator

Prometheus Operator: introduction, installation, and usage

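Introduction

Prometheus is the cluster monitoring and alerting platform recommended for Kubernetes 1.11. Prometheus Operator is a set of controllers, open-sourced by CoreOS, for running Prometheus on Kubernetes. Its goal is to simplify deploying and maintaining Prometheus.

The architecture is as follows:

It consists of five main parts:

- Operator: the main controller, which manages and deploys everything based on Custom Resource Definitions (CRDs);

- Prometheus Server: a set of Prometheus servers that the Operator deploys from a custom resource of kind Prometheus. You can think of this custom resource as a StatefulSet specialized for managing Prometheus servers;

- ServiceMonitor: a Kubernetes custom resource that describes the list of targets for Prometheus Server. The Operator watches this resource and dynamically updates the Prometheus Server's scrape targets when it changes. The resource uses a selector to pick Service endpoints by label, and Prometheus Server then pulls metrics through those Services;

- Service: an ordinary Kubernetes Service. Here it fronts the Pods that serve metrics, so that a ServiceMonitor can select it and Prometheus Server can scrape it. In Prometheus terms it is a target, that is, an object monitored by Prometheus, for example a Node Exporter Service deployed on Kubernetes;

- Alertmanager: receives events from Prometheus and decides how to send notifications based on the defined notification groups.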
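The ServiceMonitor-to-Service link above relies on Kubernetes label selection. With equality-based matchLabels, a Service is selected only if it carries every key/value pair in the selector. The Java below is a sketch of that rule alone. It ignores matchExpressions and namespace selection, and the label values are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

/**
 * Sketch of equality-based label selection (matchLabels): a Service is selected when it
 * has every key/value pair of the selector. matchExpressions are not modeled.
 */
final class LabelSelector {
    static boolean matchLabels(Map<String, String> selector, Map<String, String> labels) {
        for (Map.Entry<String, String> required : selector.entrySet()) {
            if (!required.getValue().equals(labels.get(required.getKey()))) {
                return false; // key missing or value differs
            }
        }
        return true; // an empty selector matches everything
    }

    public static void main(String[] args) {
        Map<String, String> selector = new HashMap<>();
        selector.put("app", "node-exporter"); // hypothetical label

        Map<String, String> exporterService = new HashMap<>();
        exporterService.put("app", "node-exporter");
        exporterService.put("release", "kube-prometheus");

        Map<String, String> grafanaService = new HashMap<>();
        grafanaService.put("app", "grafana");

        System.out.println(matchLabels(selector, exporterService)); // true
        System.out.println(matchLabels(selector, grafanaService));  // false
    }
}
```

Extra labels on the Service do not prevent a match. Only the selector's own pairs have to be present.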

Installation

Install with the Helm charts:

```shell
helm repo add coreos https://s3-eu-west-1.amazonaws.com/coreos-charts/stable/
helm install coreos/prometheus-operator --name prometheus-operator --namespace monitoring

# Tell kube-prometheus that the cluster runs CoreDNS (not kube-dns)
cat>kube-prometheus-values.yaml<<EOF
# Select Deployed DNS Solution
deployCoreDNS: true
deployKubeDNS: false
deployKubeEtcd: true
EOF

helm install coreos/kube-prometheus --name kube-prometheus --namespace monitoring -f kube-prometheus-values.yaml

# Expose Grafana through a domain name by adding an Ingress
cat>grafana-ingress.yaml<<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kube-prometheus-grafana
  namespace: monitoring
spec:
  rules:
  - host: grafana.domain.com
    http:
      paths:
      - path: /
        backend:
          serviceName: kube-prometheus-grafana
          servicePort: 80
EOF

kubectl apply -f grafana-ingress.yaml

# The images of deployment kube-prometheus-exporter-kube-state cannot be pulled; switch to a mirror in China
# by prefixing the image addresses with registry.cn-hangzhou.aliyuncs.com/google_containers
kubectl edit deploy kube-prometheus-exporter-kube-state -n monitoring

# Disable Grafana anonymous authentication
kubectl edit deploy kube-prometheus-grafana -n monitoring
# change the env var GF_AUTH_ANONYMOUS_ENABLED to false:
#   - name: GF_AUTH_ANONYMOUS_ENABLED
#     value: "false"
```

Usage

Open grafana.domain.com. The username and password are both admin.


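K8s 1.11 installation on Alibaba Cloud

Installing an HA Kubernetes 1.11.2 cluster on Alibaba Cloud

Architecture

keepalived cannot be used on Alibaba Cloud ECS, so we put an Alibaba Cloud internal load balancer in front of the masters. The internal load balancer, however, cannot forward traffic from an ECS instance in the VPC back to that same instance. HAProxy is therefore also installed on the masters to balance across the three masters.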

cn-shenzhen.i-wz9jeltjohlnyf56uco2 : etcd master haproxy 172.16.0.196

cn-shenzhen.i-wz9jeltjohlnyf56uco4 : etcd master haproxy 172.16.0.197

cn-shenzhen.i-wz9jeltjohlnyf56uco1 : etcd master haproxy 172.16.0.198

cn-shenzhen.i-wz9jeltjohlnyf56uco3 : node 172.16.0.199

cn-shenzhen.i-wz9jeltjohlnyf56uco0 : node 172.16.0.199

(load balancer IP): 172.16.0.201

Preparation

- Rename each ECS node to regionId.instanceId; Alibaba Cloud's plugins require this name

- Set up passwordless SSH between the master nodes

- Configure hosts resolution:

```shell
# On masters: k8s-master-lb points to the local machine and is balanced by HAProxy
cat >>/etc/hosts<<EOF
172.16.0.196 cn-shenzhen.i-wz9jeltjohlnyf56uco2
172.16.0.197 cn-shenzhen.i-wz9jeltjohlnyf56uco4
172.16.0.198 cn-shenzhen.i-wz9jeltjohlnyf56uco1
127.0.0.1 k8s-master-lb
EOF

# On nodes: k8s-master-lb points to the load balancer IP
cat >>/etc/hosts<<EOF
172.16.0.196 cn-shenzhen.i-wz9jeltjohlnyf56uco2
172.16.0.197 cn-shenzhen.i-wz9jeltjohlnyf56uco4
172.16.0.198 cn-shenzhen.i-wz9jeltjohlnyf56uco1
172.16.0.201 k8s-master-lb
EOF
```

Install Docker

```shell
# Remove any existing Docker packages, then install a specific docker-ce version
yum remove -y docker-ce docker-ce-selinux container-selinux

# Configure the Docker yum repository
sudo yum install -y yum-utils \
  device-mapper-persistent-data \
  lvm2
yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
yum install -y --setopt=obsoletes=0 \
  docker-ce-17.03.1.ce-1.el7.centos \
  docker-ce-selinux-17.03.1.ce-1.el7.centos

# Configure the Alibaba Cloud Docker registry mirror
sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://8dxol81m.mirror.aliyuncs.com"]
}
EOF

sed -i '$a net.bridge.bridge-nf-call-iptables = 1' /usr/lib/sysctl.d/00-system.conf
echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables

# Enable forwarding
# Starting with 1.13, Docker changed its default firewall rules and set the
# policy of the FORWARD chain in the iptables filter table to DROP,
# which prevents Pods on different nodes of a Kubernetes cluster from communicating
iptables -P FORWARD ACCEPT
sed -i "/ExecStart=/a\ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT" /lib/systemd/system/docker.service

systemctl daemon-reload
systemctl enable docker
systemctl restart docker
```

配制haproxy

所有master都安装haproxy

#拉取haproxy镜像

docker pull haproxy:1.7.8-alpine

mkdir /etc/haproxy

cat >/etc/haproxy/haproxy.cfg<<EOF

global

log 127.0.0.1 local0 err

maxconn 50000

uid 99

gid 99

#daemon

nbproc 1

pidfile haproxy.pid

defaults

mode http

log 127.0.0.1 local0 err

maxconn 50000

retries 3

timeout connect 5s

timeout client 30s

timeout server 30s

timeout check 2s

listen admin_stats

mode http

bind 0.0.0.0:1080

log 127.0.0.1 local0 err

stats refresh 30s

stats uri /haproxy-status

stats realm Haproxy\ Statistics

stats auth will:will

stats hide-version

stats admin if TRUE

frontend k8s-https

bind 0.0.0.0:8443

mode tcp

#maxconn 50000

default_backend k8s-https

backend k8s-https

mode tcp

balance roundrobin

server lab1 172.16.0.196:6443 weight 1 maxconn 1000 check inter 2000 rise 2 fall 3

server lab2 172.16.0.197:6443 weight 1 maxconn 1000 check inter 2000 rise 2 fall 3

server lab3 172.16.0.198:6443 weight 1 maxconn 1000 check inter 2000 rise 2 fall 3

EOF
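The backend servers follow one pattern with a different address each, which is where copy-paste slips such as a repeated IP creep in. The sketch below prints one server line per master IP. It reuses the lab1..labN naming and health-check settings from the config above. The function name is hypothetical.

```shell
# Sketch: print one haproxy "server" line per master IP given as arguments.
# Names are lab1..labN; port and check settings match the backend above.
haproxy_backend_servers() {
  local n=1 ip
  for ip in "$@"; do
    echo "server lab$n $ip:6443 weight 1 maxconn 1000 check inter 2000 rise 2 fall 3"
    n=$((n + 1))
  done
}
# Example: haproxy_backend_servers 172.16.0.196 172.16.0.197 172.16.0.198
```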

#Start haproxy

docker run -d --name my-haproxy \
    -v /etc/haproxy:/usr/local/etc/haproxy:ro \
    -p 8443:8443 \
    -p 1080:1080 \
    --restart always \
    haproxy:1.7.8-alpine

#Check the logs

docker logs my-haproxy

Install kubeadm, kubelet and kubectl

#Configure the repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

#Install

yum install -y kubelet kubeadm kubectl ipvsadm

Configure system parameters

#Disable SELinux for the current boot

#To disable it permanently, set SELINUX=disabled in /etc/sysconfig/selinux

setenforce 0

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/sysconfig/selinux

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/sysconfig/selinux

sed -i "s/^SELINUX=permissive/SELINUX=disabled/g" /etc/selinux/config
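The four sed calls above cover two starting values in two files. On CentOS 7, /etc/sysconfig/selinux is normally a symlink to /etc/selinux/config. Plain sed -i replaces a symlink with a regular file, which is why both files end up being edited separately. The sketch below edits the real file once and handles both values with one extended regex. The function name is hypothetical.

```shell
# Sketch: set SELINUX=disabled whether the file currently says enforcing or permissive.
# $1 = SELinux config file; on CentOS 7: /etc/selinux/config
disable_selinux_config() {
  sed -i -E 's/^SELINUX=(enforcing|permissive)$/SELINUX=disabled/' "$1"
}
```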

#Turn off swap for the current boot

#To turn it off permanently, comment out the swap lines in /etc/fstab

swapoff -a

sed -i 's/.*swap.*/#&/' /etc/fstab
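The sed 's/.*swap.*/#&/' command comments out every line that contains the word swap, including lines that are already comments. The slightly stricter sketch below uses awk instead. It only comments out active entries whose filesystem type (the third field) is swap. The function name is hypothetical.

```shell
# Sketch: comment out active fstab entries whose third field is "swap".
# $1 = fstab path; on a real host: /etc/fstab
comment_swap_entries() {
  local tmp
  tmp=$(mktemp) || return 1
  awk '$1 !~ /^#/ && $3 == "swap" { $0 = "#" $0 } { print }' "$1" > "$tmp" &&
    cat "$tmp" > "$1"   # cat keeps the original file's owner and permissions
  rm -f "$tmp"
}
```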

systemctl stop firewalld

systemctl disable firewalld

# Configure the forwarding-related kernel parameters; otherwise errors may occur

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

vm.swappiness=0

EOF

sysctl --system

#Load the IPVS kernel modules

#They must be loaded again after a reboot

modprobe ip_vs

modprobe ip_vs_rr

modprobe ip_vs_wrr

modprobe ip_vs_sh

modprobe nf_conntrack_ipv4

lsmod | grep ip_vs
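As the comment notes, modprobe does not survive a reboot. On CentOS 7 (systemd), systemd-modules-load loads at boot every module listed, one per line, in /etc/modules-load.d/*.conf. The sketch below writes such a file. The name ipvs.conf is arbitrary, and the target path is a parameter so the function can be tested on a scratch file.

```shell
# Sketch: persist the IPVS modules for systemd-modules-load.
# $1 = target file; on a real host e.g. /etc/modules-load.d/ipvs.conf
write_ipvs_modules_conf() {
  printf '%s\n' ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4 > "$1"
}
```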

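Configure and start kubelet

#Make kubelet use a pause image from a registry mirror reachable in China

#Set kubelet's cgroup driver to match Docker's

#Get Docker's cgroup driver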

DOCKER_CGROUPS=$(docker info | grep 'Cgroup' | cut -d' ' -f3)

echo $DOCKER_CGROUPS
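The cut -d' ' -f3 approach depends on the exact spacing of the docker info output. Newer Docker releases indent that line and add a second "Cgroup Version" line. The sketch below matches the "Cgroup Driver:" label instead. The function name is hypothetical. Docker 1.13 and later should also accept docker info --format '{{.CgroupDriver}}'.

```shell
# Sketch: read `docker info` text on stdin and print the cgroup driver.
# On a real host: DOCKER_CGROUPS=$(docker info 2>/dev/null | cgroup_driver_from_info)
cgroup_driver_from_info() {
  awk -F': *' '/Cgroup Driver/ { print $2; exit }'
}
```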

cat >/etc/sysconfig/kubelet<<EOF

KUBELET_EXTRA_ARGS="--cgroup-driver=$DOCKER_CGROUPS --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1"

EOF

#Start

systemctl daemon-reload

systemctl enable kubelet && systemctl restart kubelet

Configure the first master node

#Generate the configuration file

CP0_IP="172.16.0.196"
CP0_HOSTNAME="cn-shenzhen.i-wz9jeltjohlnyf56uco2"

cat >kubeadm-master.config<<EOF
apiVersion: kubeadm.k8s.io/v1alpha2
kind: MasterConfiguration
kubernetesVersion: v1.11.2
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
apiServerCertSANs:
- "cn-shenzhen.i-wz9jeltjohlnyf56uco2"
- "cn-shenzhen.i-wz9jeltjohlnyf56uco4"
- "cn-shenzhen.i-wz9jeltjohlnyf56uco1"
- "172.16.0.196"
- "172.16.0.197"
- "172.16.0.198"
- "172.16.0.201"
- "127.0.0.1"
- "k8s-master-lb"
api:
  advertiseAddress: $CP0_IP
  controlPlaneEndpoint: k8s-master-lb:8443
etcd:
  local:
    extraArgs:
      listen-client-urls: "https://127.0.0.1:2379,https://$CP0_IP:2379"
      advertise-client-urls: "https://$CP0_IP:2379"
      listen-peer-urls: "https://$CP0_IP:2380"
      initial-advertise-peer-urls: "https://$CP0_IP:2380"
      initial-cluster: "$CP0_HOSTNAME=https://$CP0_IP:2380"
    serverCertSANs:
    - $CP0_HOSTNAME
    - $CP0_IP
    peerCertSANs:
    - $CP0_HOSTNAME
    - $CP0_IP
controllerManagerExtraArgs:
  node-monitor-grace-period: 10s
  pod-eviction-timeout: 10s
networking:
  podSubnet: 10.244.0.0/16
kubeProxy:
  config:
    mode: ipvs

EOF

#Pull the images in advance

#If this fails, simply run it again

kubeadm config images pull --config kubeadm-master.config

#Initialize

#Save the join command it prints

kubeadm init --config kubeadm-master.config

#Pack the CA files and copy them to the other master nodes

cd /etc/kubernetes && tar cvzf k8s-key.tgz admin.conf pki/ca.* pki/sa.* pki/front-proxy-ca.* pki/etcd/ca.*

scp k8s-key.tgz cn-shenzhen.i-wz9jeltjohlnyf56uco4:~/

scp k8s-key.tgz cn-shenzhen.i-wz9jeltjohlnyf56uco1:~/

ssh cn-shenzhen.i-wz9jeltjohlnyf56uco4 'tar xf k8s-key.tgz -C /etc/kubernetes/'

ssh cn-shenzhen.i-wz9jeltjohlnyf56uco1 'tar xf k8s-key.tgz -C /etc/kubernetes/'

Configure the second master

#Generate the configuration file

CP0_IP="172.16.0.196"
CP0_HOSTNAME="cn-shenzhen.i-wz9jeltjohlnyf56uco2"
CP1_IP="172.16.0.197"
CP1_HOSTNAME="cn-shenzhen.i-wz9jeltjohlnyf56uco4"

cat >kubeadm-master.config<<EOF
apiVersion: kubeadm.k8s.io/v1alpha2
kind: MasterConfiguration
kubernetesVersion: v1.11.2
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
apiServerCertSANs:
- "cn-shenzhen.i-wz9jeltjohlnyf56uco2"
- "cn-shenzhen.i-wz9jeltjohlnyf56uco4"
- "cn-shenzhen.i-wz9jeltjohlnyf56uco1"
- "172.16.0.196"
- "172.16.0.197"
- "172.16.0.198"
- "172.16.0.201"
- "127.0.0.1"
- "k8s-master-lb"
api:
  advertiseAddress: $CP1_IP
  controlPlaneEndpoint: k8s-master-lb:8443
etcd:
  local:
    extraArgs:
      listen-client-urls: "https://127.0.0.1:2379,https://$CP1_IP:2379"
      advertise-client-urls: "https://$CP1_IP:2379"
      listen-peer-urls: "https://$CP1_IP:2380"
      initial-advertise-peer-urls: "https://$CP1_IP:2380"
      initial-cluster: "$CP0_HOSTNAME=https://$CP0_IP:2380,$CP1_HOSTNAME=https://$CP1_IP:2380"
      initial-cluster-state: existing
    serverCertSANs:
    - $CP1_HOSTNAME
    - $CP1_IP
    peerCertSANs:
    - $CP1_HOSTNAME
    - $CP1_IP
controllerManagerExtraArgs:
  node-monitor-grace-period: 10s
  pod-eviction-timeout: 10s
networking:
  podSubnet: 10.244.0.0/16
kubeProxy:
  config:
    mode: ipvs

EOF

#Configure kubelet

kubeadm alpha phase certs all --config kubeadm-master.config

kubeadm alpha phase kubelet config write-to-disk --config kubeadm-master.config

kubeadm alpha phase kubelet write-env-file --config kubeadm-master.config

kubeadm alpha phase kubeconfig kubelet --config kubeadm-master.config

systemctl restart kubelet

#Add the new etcd member to the cluster

CP0_IP="172.16.0.196"

CP0_HOSTNAME="cn-shenzhen.i-wz9jeltjohlnyf56uco2"

CP1_IP="172.16.0.197"

CP1_HOSTNAME="cn-shenzhen.i-wz9jeltjohlnyf56uco4"

KUBECONFIG=/etc/kubernetes/admin.conf kubectl exec -n kube-system etcd-${CP0_HOSTNAME} -- etcdctl --ca-file /etc/kubernetes/pki/etcd/ca.crt --cert-file /etc/kubernetes/pki/etcd/peer.crt --key-file /etc/kubernetes/pki/etcd/peer.key --endpoints=https://${CP0_IP}:2379 member add ${CP1_HOSTNAME} https://${CP1_IP}:2380

kubeadm alpha phase etcd local --config kubeadm-master.config

#Pull the images in advance

#If this fails, simply run it again

kubeadm config images pull --config kubeadm-master.config

#Deploy

kubeadm alpha phase kubeconfig all --config kubeadm-master.config

kubeadm alpha phase controlplane all --config kubeadm-master.config

kubeadm alpha phase mark-master --config kubeadm-master.config

Configure the third master

#Generate the configuration file

CP0_IP="172.16.0.196"
CP0_HOSTNAME="cn-shenzhen.i-wz9jeltjohlnyf56uco2"
CP1_IP="172.16.0.197"
CP1_HOSTNAME="cn-shenzhen.i-wz9jeltjohlnyf56uco4"
CP2_IP="172.16.0.198"
CP2_HOSTNAME="cn-shenzhen.i-wz9jeltjohlnyf56uco1"

cat >kubeadm-master.config<<EOF
apiVersion: kubeadm.k8s.io/v1alpha2
kind: MasterConfiguration
kubernetesVersion: v1.11.2
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
apiServerCertSANs:
- "cn-shenzhen.i-wz9jeltjohlnyf56uco2"
- "cn-shenzhen.i-wz9jeltjohlnyf56uco4"
- "cn-shenzhen.i-wz9jeltjohlnyf56uco1"
- "172.16.0.196"
- "172.16.0.197"
- "172.16.0.198"
- "172.16.0.201"
- "127.0.0.1"
- "k8s-master-lb"
api:
  advertiseAddress: $CP2_IP
  controlPlaneEndpoint: k8s-master-lb:8443
etcd:
  local:
    extraArgs:
      listen-client-urls: "https://127.0.0.1:2379,https://$CP2_IP:2379"
      advertise-client-urls: "https://$CP2_IP:2379"
      listen-peer-urls: "https://$CP2_IP:2380"
      initial-advertise-peer-urls: "https://$CP2_IP:2380"
      initial-cluster: "$CP0_HOSTNAME=https://$CP0_IP:2380,$CP1_HOSTNAME=https://$CP1_IP:2380,$CP2_HOSTNAME=https://$CP2_IP:2380"
      initial-cluster-state: existing
    serverCertSANs:
    - $CP2_HOSTNAME
    - $CP2_IP
    peerCertSANs:
    - $CP2_HOSTNAME
    - $CP2_IP
controllerManagerExtraArgs:
  node-monitor-grace-period: 10s
  pod-eviction-timeout: 10s
networking:
  podSubnet: 10.244.0.0/16
kubeProxy:
  config:
    mode: ipvs

EOF
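Each additional master repeats the etcd initial-cluster list with one more NAME=https://IP:2380 entry. The sketch below builds that value from name=ip pairs, so the list for the Nth master can be generated instead of edited by hand. The function name is hypothetical.

```shell
# Sketch: build an etcd initial-cluster value from NAME=IP arguments.
# Example: etcd_initial_cluster "$CP0_HOSTNAME=$CP0_IP" "$CP1_HOSTNAME=$CP1_IP"
etcd_initial_cluster() {
  local out="" pair name ip
  for pair in "$@"; do
    name=${pair%%=*}   # text before the first "="
    ip=${pair#*=}      # text after the first "="
    out="${out:+$out,}$name=https://$ip:2380"
  done
  printf '%s\n' "$out"
}
```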

#Configure kubelet

kubeadm alpha phase certs all --config kubeadm-master.config

kubeadm alpha phase kubelet config write-to-disk --config kubeadm-master.config

kubeadm alpha phase kubelet write-env-file --config kubeadm-master.config

kubeadm alpha phase kubeconfig kubelet --config kubeadm-master.config

systemctl restart kubelet

#Add the new etcd member to the cluster

CP0_IP="172.16.0.196"

CP0_HOSTNAME="cn-shenzhen.i-wz9jeltjohlnyf56uco2"

CP2_IP="172.16.0.198"

CP2_HOSTNAME="cn-shenzhen.i-wz9jeltjohlnyf56uco1"

KUBECONFIG=/etc/kubernetes/admin.conf kubectl exec -n kube-system etcd-${CP0_HOSTNAME} -- etcdctl --ca-file /etc/kubernetes/pki/etcd/ca.crt --cert-file /etc/kubernetes/pki/etcd/peer.crt --key-file /etc/kubernetes/pki/etcd/peer.key --endpoints=https://${CP0_IP}:2379 member add ${CP2_HOSTNAME} https://${CP2_IP}:2380

kubeadm alpha phase etcd local --config kubeadm-master.config

#Pull the images in advance

#If this fails, simply run it again

kubeadm config images pull --config kubeadm-master.config

#Deploy

kubeadm alpha phase kubeconfig all --config kubeadm-master.config

kubeadm alpha phase controlplane all --config kubeadm-master.config

kubeadm alpha phase mark-master --config kubeadm-master.config

Configure kubectl

rm -rf $HOME/.kube

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

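#List the nodes

kubectl get nodes

#Nodes only show as Ready after the network plugin has been installed and configured

#Allow application pods on the masters so they share the workload; other system components

#such as dashboard, heapster and EFK can now be deployed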

kubectl taint nodes --all node-role.kubernetes.io/master-

Configure the network plugin

wget https://raw.githubusercontent.com/coreos/flannel/v0.10.0/Documentation/kube-flannel.yml

#Edit the configuration

#The Network here must match podSubnet in the kubeadm configuration above

net-conf.json: |
  {
    "Network": "10.244.0.0/16",
    "Backend": {
      "Type": "vxlan"
    }
  }

#Change the image

image: registry.cn-shanghai.aliyuncs.com/gcr-k8s/flannel:v0.10.0-amd64
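The image can be swapped with sed instead of by hand. This sketch assumes the v0.10.0 manifest references quay.io/coreos/flannel:v0.10.0-amd64, so check the downloaded file first. The function name is hypothetical.

```shell
# Sketch: point kube-flannel.yml ($1) at the Aliyun mirror image.
# Assumes the upstream image string is quay.io/coreos/flannel:v0.10.0-amd64.
use_flannel_mirror() {
  sed -i 's#quay\.io/coreos/flannel:v0\.10\.0-amd64#registry.cn-shanghai.aliyuncs.com/gcr-k8s/flannel:v0.10.0-amd64#g' "$1"
}
```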

#Apply

kubectl apply -f kube-flannel.yml

#Check

kubectl get pods --namespace kube-system

kubectl get svc --namespace kube-system

Install ingress-nginx

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/mandatory.yaml

#Change the default-http-backend image

image: registry.cn-shenzhen.aliyuncs.com/common-images/defaultbackend:1.4

kubectl apply -f mandatory.yaml

#Add a Service exposing ports 30080 and 30443

cat>ingress-nginx-service.yaml<<EOF
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30080
    protocol: TCP
  - name: https
    port: 443
    targetPort: 443
    protocol: TCP
    nodePort: 30443
  selector:
    app: ingress-nginx
EOF

kubectl apply -f ingress-nginx-service.yaml

Create a public-facing load balancer on Aliyun and forward TCP ports 80 and 443 to 30080 and 30443.

Install the dashboard

wget https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml

#Change the image

image: registry.cn-hangzhou.aliyuncs.com/k8sth/kubernetes-dashboard-amd64:v1.8.3

kubectl apply -f kubernetes-dashboard.yaml

#Delete the old certificate

kubectl delete secrets kubernetes-dashboard-certs -n kube-system

#Configure the dashboard HTTPS certificate; free certificates can be requested from Aliyun

kubectl create secret tls kubernetes-dashboard-certs --key ./214658435890700.key --cert ./214658435890700.pem -n kube-system

#Create an admin user

cat >admin-user.yaml<<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system
EOF

kubectl apply -f admin-user.yaml

#Configure the Ingress

cat >dashboard-ingress.yaml<<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/secure-backends: "true"
  name: dashboard-ingress
  namespace: kube-system
spec:
  tls:
  - hosts:
    - k8s.xxxx.xxx
    secretName: kubernetes-dashboard-certs
  rules:
  - host: k8s.xxx.xxx
    http:
      paths:
      - backend:
          serviceName: kubernetes-dashboard
          servicePort: 443
EOF

kubectl apply -f dashboard-ingress.yaml

#Get the token used to log in

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

Install the Aliyun plugins

First, write this configuration file on every machine. The accessKeyID and accessKeySecret are available in the Aliyun console.

cat >/etc/kubernetes/cloud-config<<EOF

{

"global": {

"accessKeyID": "xxx",

"accessKeySecret": "xxxx"

}

}

EOF

The storage plugin requires changing kubelet's configuration file:

cat >/etc/sysconfig/kubelet<<EOF

KUBELET_EXTRA_ARGS="--enable-controller-attach-detach=false --cgroup-driver=cgroupfs --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1"

EOF

systemctl daemon-reload

systemctl restart kubelet

cat > flexvolume.yaml<<EOF
apiVersion: apps/v1 # for versions before 1.8.0 use extensions/v1beta1
kind: DaemonSet
metadata:
  name: flexvolume
  namespace: kube-system
  labels:
    k8s-volume: flexvolume
spec:
  selector:
    matchLabels:
      name: acs-flexvolume
  template:
    metadata:
      labels:
        name: acs-flexvolume
    spec:
      hostPID: true
      hostNetwork: true
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      containers:
      - name: acs-flexvolume
        image: registry.cn-hangzhou.aliyuncs.com/acs/flexvolume:v1.9.7-42e8198
        imagePullPolicy: Always
        securityContext:
          privileged: true
        env:
        - name: ACS_DISK
          value: "true"
        - name: ACS_NAS
          value: "true"
        - name: ACS_OSS
          value: "true"
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: usrdir
          mountPath: /host/usr/
        - name: etcdir
          mountPath: /host/etc/
        - name: logdir
          mountPath: /var/log/alicloud/
      volumes:
      - name: usrdir
        hostPath:
          path: /usr/
      - name: etcdir
        hostPath:
          path: /etc/
      - name: logdir
        hostPath:
          path: /var/log/alicloud/
EOF

kubectl apply -f flexvolume.yaml

cat >disk-provisioner.yaml<<EOF
---
kind: StorageClass
apiVersion: storage.k8s.io/v1beta1
metadata:
  name: alicloud-disk-common
provisioner: alicloud/disk
parameters:
  type: cloud
---
kind: StorageClass
apiVersion: storage.k8s.io/v1beta1
metadata:
  name: alicloud-disk-efficiency
provisioner: alicloud/disk
parameters:
  type: cloud_efficiency
---
kind: StorageClass
apiVersion: storage.k8s.io/v1beta1
metadata:
  name: alicloud-disk-ssd
provisioner: alicloud/disk
parameters:
  type: cloud_ssd
---
kind: StorageClass
apiVersion: storage.k8s.io/v1beta1
metadata:
  name: alicloud-disk-available
provisioner: alicloud/disk
parameters:
  type: available
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: alicloud-disk-controller-runner
rules:
- apiGroups: [""]
  resources: ["persistentvolumes"]
  verbs: ["get", "list", "watch", "create", "delete"]
- apiGroups: [""]
  resources: ["persistentvolumeclaims"]
  verbs: ["get", "list", "watch", "update"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources: ["events"]
  verbs: ["list", "watch", "create", "update", "patch"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: alicloud-disk-controller
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: run-alicloud-disk-controller
subjects:
- kind: ServiceAccount
  name: alicloud-disk-controller
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: alicloud-disk-controller-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: alicloud-disk-controller
  namespace: kube-system
spec:
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: alicloud-disk-controller
    spec:
      tolerations:
      - effect: NoSchedule
        operator: Exists
        key: node-role.kubernetes.io/master
      - effect: NoSchedule
        operator: Exists
        key: node.cloudprovider.kubernetes.io/uninitialized
      nodeSelector:
        node-role.kubernetes.io/master: ""
      serviceAccount: alicloud-disk-controller
      containers:
      - name: alicloud-disk-controller
        image: registry.cn-hangzhou.aliyuncs.com/acs/alicloud-disk-controller:v1.9.3-ed710ce
        volumeMounts:
        - name: cloud-config
          mountPath: /etc/kubernetes/
        - name: logdir
          mountPath: /var/log/alicloud/
      volumes:
      - name: cloud-config
        hostPath:
          path: /etc/kubernetes/
      - name: logdir
        hostPath:
          path: /var/log/alicloud/
EOF

kubectl apply -f disk-provisioner.yaml

#Install the NAS provisioner

cat >nas-controller.yaml<<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: alicloud-nas
provisioner: alicloud/nas
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: alicloud-nas-controller
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: run-alicloud-nas-controller
subjects:
- kind: ServiceAccount
  name: alicloud-nas-controller
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: alicloud-disk-controller-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: alicloud-nas-controller
  namespace: kube-system
spec:
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: alicloud-nas-controller
    spec:
      tolerations:
      - effect: NoSchedule
        operator: Exists
        key: node-role.kubernetes.io/master
      - effect: NoSchedule
        operator: Exists
        key: node.cloudprovider.kubernetes.io/uninitialized
      nodeSelector:
        node-role.kubernetes.io/master: ""
      serviceAccount: alicloud-nas-controller
      containers:
      - name: alicloud-nas-controller
        image: registry.cn-hangzhou.aliyuncs.com/acs/alicloud-nas-controller:v1.8.4
        volumeMounts:
        - mountPath: /persistentvolumes
          name: nfs-client-root
        env:
        - name: PROVISIONER_NAME
          value: alicloud/nas
        - name: NFS_SERVER
          value: 2b9c84b5cc-vap63.cn-shenzhen.nas.aliyuncs.com
        - name: NFS_PATH
          value: /
      volumes:
      - name: nfs-client-root
        nfs:
          server: 2b9c84b5cc-vap63.cn-shenzhen.nas.aliyuncs.com
          path: /
EOF

kubectl apply -f nas-controller.yaml

Tips

Forgot the join command (for adding nodes) that was printed when the first master was initialized? Generate a new one:

kubeadm token create --print-join-command


Postgres k8s 集群

postgres集群方案

总结一下在k8s集群上部署一套高可用、读写分离的postgres集群的方案,大体上有以下三种方案可以选择:

Patroni

只了解了一下官网说明,大体上应该是一套可以进行自定义模版

Crunchy

将现有的开源框架分别整理成Dokcer镜像并做了配制的自定义,通过这些镜像组合提供各种类型的postgres集群解决方案。

官方推荐解决方案

stolon

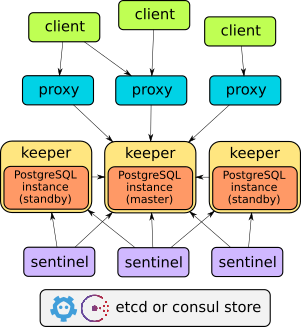

Stolon是专门用于部署在云上的PostgresSql高可用集群,与Kubernetes集成较好。

架构图:

选择

主要在stolon和Crunchy对比:

stolon

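- Very good high availability; failover is fairly reliable;

- Does not support read/write splitting;

- Cluster state is kept in etcd or consul; I have not benchmarked it, so I don't know how well it performs;

Crunchy

- The cluster layout can be customized, which is fairly flexible;

- Read/write splitting via pgpool;

- High availability relies on k8s: running several Pods for the Master requires a Volume with access mode ReadWriteMany, but few volume types support ReadWriteMany and most of them are network storage with modest performance; otherwise you depend on k8s rescheduling the Pod after a failure;

- Backup, restore and management each have their own components that can be installed as needed;

- Every component uses a customized Docker image, which introduces coupling.

After the comparison, Crunchy fits my needs better. For Master high availability, I can look for a better solution later and replace the Master's deployment (or Crunchy may already have a solution that I just haven't learned yet...).

Crunchy deployment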

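Everything is deployed on a k8s cluster on Aliyun; for how my cluster was set up, see my post on installing k8s 1.10 on Aliyun.

The whole installation is packaged as a Helm chart and can be installed directly:

git clone https://github.com/jamesDeng/helm-charts.git

cd helm-charts/pgsql-primary-replica

helm install ./ --name pgsql-primary-replica --set primary.pv.volumeId=xxxx

primary.pv.volumeId is the ID of the Aliyun cloud disk.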

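Notes

The set of components is still incomplete, and the remaining parts will be filled in over time. It currently consists of:

- pgpool-II deployment

- primary deployment

- replica statefulset

Installing the chart creates the following in your Kubernetes cluster: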

- Create a service named primary

- Create a service named replica

- Create a service named pgpool

- Create a deployment named primary

- Create a statefulset named replica

- Create a deployment named pgpool

- Create a persistent volume named primary-pv and a persistent volume claim of primary-pvc

- Create a secret pgprimary-secret

- Create a secret pgpool-secrets

- Create a configMap pgpool-pgconf

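Custom configuration files

In the chart's root directory:

- pool-configs
  - pgpool.conf
  - pool_hba.conf
  - pool_passwd
- primary-configs
  - pg_hba.conf
  - postgresql.conf
  - setup.sql

When installing from a repo, I have not found a way to make helm install replace these files, so there are currently two workarounds:

- After installation, manually edit the corresponding ConfigMap and Secret;

- Download the chart sources with helm fetch pgsql-primary-replica --untar, edit the configuration files, and install with helm install ./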

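Changing the postgres username and password

If you connect to the database through pgpool, the postgres usernames and passwords must also be configured in pool_passwd.

Before deployment

You can set the passwords of the default user postgres and of the user named by PG_USER:

- Change PG_ROOT_PASSWORD or PG_PASSWORD in values.yaml;

- Generate the password hash with pgpool's pg_md5 command (pg_md5 --md5auth --username= );

- Update the username and password in pool-configs/pool_passwd.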
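If pg_md5 is not at hand, the same kind of entry can be computed with md5sum. PostgreSQL stores an md5 password as the string "md5" followed by md5(password concatenated with username), and pool_passwd holds one username:hash line per user. A minimal sketch; the function name is hypothetical.

```shell
# Sketch: print a pool_passwd line "user:md5<md5(password + user)>".
# Example: pool_passwd_line postgres 'secret' >> pool-configs/pool_passwd
pool_passwd_line() {
  local user=$1 password=$2 hash
  hash=$(printf '%s%s' "$password" "$user" | md5sum | cut -d' ' -f1)
  printf '%s:md5%s\n' "$user" "$hash"
}
```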

Changing it after deployment

First, get the postgres usernames and md5 passwords:

$ kubectl get pod -n thinker-production
NAME                       READY   STATUS    RESTARTS   AGE
pgpool-67987c5c67-gjr5h    1/1     Running   0          3d
primary-6f9f488df8-6xjjd   1/1     Running   0          3d
replica-0                  1/1     Running   0          3d

# Open a shell in the primary pod
$ kubectl exec -it primary-6f9f488df8-6xjjd -n thinker-production -- sh
$ psql

#Run SQL to look up the md5 hashes
$ select usename,passwd from pg_shadow;
   usename   |               passwd
-------------+-------------------------------------
 postgres    | md5dc5aac68d3de3d518e49660904174d0c
 primaryuser | md576dae4ce31558c16fe845e46d66a403c
 testuser    | md599e8713364988502fa6189781bcf648f
(3 rows)

The format of pool_passwd is:

postgres:md5dc5aac68d3de3d518e49660904174d0c
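The psql table above can be turned into pool_passwd lines mechanically. The awk sketch below reads psql's aligned output on stdin. It keeps only rows whose second column starts with md5, which drops the header, separator and "(N rows)" lines. The function name is hypothetical. Alternatively, psql can print the pairs directly in unaligned mode, e.g. psql -At -F: -c 'select usename, passwd from pg_shadow'.

```shell
# Sketch: convert "usename | passwd" rows from psql into "user:md5..." lines.
psql_rows_to_pool_passwd() {
  awk -F'|' '{
    user = $1; pass = $2
    gsub(/^[ \t]+|[ \t]+$/, "", user)   # trim surrounding blanks
    gsub(/^[ \t]+|[ \t]+$/, "", pass)
    if (pass ~ /^md5/) print user ":" pass
  }'
}
```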

Kubernetes Secret values are base64-encoded, so encode the line first:

$ echo -n 'postgres:md5dc5aac68d3de3d518e49660904174d0c' | base64

cG9zdGdyZXM6bWQ1ZGM1YWFjNjhkM2RlM2Q1MThlNDk2NjA5MDQxNzRkMGM=

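Edit pgpool-secrets and replace the pool_passwd value:

kubectl edit secret pgpool-secrets -n thinker-production

Edit the pgpool Deployment to trigger a rolling update:

kubectl edit deploy pgpool -n thinker-production

# Changing a pod template field such as terminationGracePeriodSeconds is usually enough

Custom values.yaml

See the chart source for the available values.yaml settings: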

helm install ./ --name pgsql-primary-replica -f values.yaml


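Dockerizing services

Since operations moved to k8s, our services must build Docker images automatically and push them to a private registry. We use an Aliyun container registry, accessed over the VPC network.

Java environment

- jdk 1.8

- spring-boot 2.0

- maven

- dockerfile-maven 1.3.6

Java image

The project is built with Maven and uses dockerfile-maven-plugin to build and push the Docker images.

Plugin declarations

Add the plugins to pom.xml: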

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-deploy-plugin</artifactId>

<version>2.7</version>

<configuration>

<!-- Do not deploy this project's artifacts to the remote repository -->

<skip>true</skip>

</configuration>

</plugin>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

<configuration>

<mainClass>vc.thinker.cabbage.web.AdminServerMain</mainClass>

<layout>JAR</layout>

<executable>true</executable>

</configuration>

<executions>

<execution>

<goals>

<goal>repackage</goal>

</goals>

</execution>

</executions>

</plugin>

<plugin>

<groupId>com.spotify</groupId>

<artifactId>dockerfile-maven-plugin</artifactId>

<version>1.3.6</version>

<executions>

<execution>

<id>default</id>

<goals>

<goal>build</goal>

<goal>push</goal>

</goals>

</execution>

</executions>

<configuration>

<!-- Registry address -->

<repository>registry-vpc.cn-shenzhen.aliyuncs.com/xxx/${project.artifactId}</repository>

<!-- docker images tag -->

<tag>${project.version}</tag>

<!-- Use Maven settings for Docker registry authentication -->

<useMavenSettingsForAuth>true</useMavenSettingsForAuth>

<!-- Dockerfile ARGs: buildArgs may define several values, each used by a matching ARG instruction in the Dockerfile -->

<buildArgs>

<!-- Path of the jar -->

<JAR_FILE>target/${project.build.finalName}.jar</JAR_FILE>

<!-- Path of the configuration file -->

<PROPERTIES>target/classes/${project.build.finalName}/application.properties</PROPERTIES>

<!-- Startup script -->

<START>target/classes/${project.build.finalName}/k8s-start.sh</START>

</buildArgs>

</configuration>

</plugin>

dockerfile

Add a Dockerfile in the project root:

#Build the Java image; note that this example does not handle the time zone

FROM openjdk:8-jre

ARG JAR_FILE

ARG PROPERTIES

ARG START

COPY ${JAR_FILE} /JAVA/app.jar

COPY ${PROPERTIES} /JAVA/application.properties

COPY ${START} /JAVA/k8s-start.sh

RUN chmod +x /JAVA/k8s-start.sh && \

ln -s /JAVA/k8s-start.sh /usr/bin/k8s-start

#Web service port

EXPOSE 8080

#CMD ["k8s-start"]

ENTRYPOINT ["k8s-start"]

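Configuration file and startup script

Add k8s-start.sh and application.properties under src/main/resources. Here is how k8s-start.sh works:

#!/bin/bash
#Override entries in application.properties with environment variables. Variable names
#cannot contain "." or "-", so by convention both are mapped to "_"
#(e.g. spring.datasource.url -> spring_datasource_url).
#bash is required for ${!j}; the Debian-based openjdk:8-jre image ships bash.

function change(){
    echo "Preparing to update setting $1"
    local j=$(echo "$1" | sed 's/[.-]/_/g')
    #${!j} reads the variable whose name is stored in j
    local i="${!j}"
    echo "Corresponding variable: $j"
    [[ ! $i ]] && echo "Environment variable $j is not set; skipping." && return 0
    #| is the sed delimiter because values such as JDBC URLs contain "/"
    sed -i "s|^$1=.*|$1=$i|" /JAVA/application.properties && echo "Updated $1"
}

#Update the configuration file
change spring.datasource.url
change spring.datasource.username
change spring.datasource.password

cd /JAVA

if [[ "$1" == 'sh' || "$1" == 'bash' || "$1" == '/bin/sh' ]]; then
    exec "/bin/sh"
fi

#java_conf holds the JVM options
#openjdk:8-jre ships neither su-exec nor a "java" user, so java is started directly
echo "Config: java_conf=$java_conf"
if [ "${java_conf}" ]; then
    echo "Running: java $java_conf -jar app.jar"
    exec java $java_conf -jar app.jar
fi

echo "Running: java $* -jar app.jar"
exec java "$@" -jar app.jar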
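The two pieces of k8s-start.sh worth checking locally are the property-to-variable name mapping and the in-place override. The sketch below pulls both out as standalone functions that can be run against a scratch properties file. The function names are hypothetical. It uses | as the sed delimiter because JDBC URLs contain "/".

```shell
# Sketch: map a property name to its environment variable name ("." and "-" -> "_").
to_env_name() {
  printf '%s\n' "$1" | sed 's/[.-]/_/g'
}

# Sketch: set property $1 to value $2 in properties file $3; skip if $2 is empty.
override_property() {
  [ -n "$2" ] || return 0
  sed -i "s|^$1=.*|$1=$2|" "$3"
}
```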

Docker registry credentials

The registry address goes in the plugin configuration, and the credentials go in Maven's settings.xml:

<configuration>

<repository>docker-repo.example.com:8080/organization/image</repository>

<tag>latest</tag>

<useMavenSettingsForAuth>true</useMavenSettingsForAuth>

</configuration>

Credentials (settings.xml):

<servers>

<server>

<id>docker-repo.example.com:8080</id>

<username>me</username>

<password>mypassword</password>

</server>

</servers>

Usage

In this example, simply running mvn deploy builds and pushes the Docker image:

mvn deploy

Running locally

docker run --name my-server -p 8080:8080 \
    -e spring_datasource_username="test" \
    -d images:tag

-e spring_datasource_username="test" overrides spring.datasource.username in application.properties.

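Dockerfile Maven behavior

By default, building the image from the Dockerfile is bound to the mvn package phase;

By default, tagging the image is bound to the mvn package phase;

By default, pushing the image is bound to the mvn deploy phase.

The goals can also be run directly, one after another: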

mvn dockerfile:build

mvn dockerfile:tag

mvn dockerfile:push

If you get "No plugin found for prefix 'dockerfile' in the current project and in the plugin groups [org.apache.maven.plugins", add the plugin's group to the pluginGroups whitelist in settings.xml:

<settings>

<pluginGroups>

<pluginGroup>com.spotify</pluginGroup>

</pluginGroups>

</settings>

Skipping the Dockerfile build and push

To temporarily skip all three Dockerfile goals, run:

mvn clean package -Ddockerfile.skip

To skip only one goal, run one of:

mvn clean package -Ddockerfile.build.skip

mvn clean package -Ddockerfile.tag.skip

mvn clean package -Ddockerfile.push.skip